Concepts

Regardless of whether or not we're formally making art, we are all living as artists.

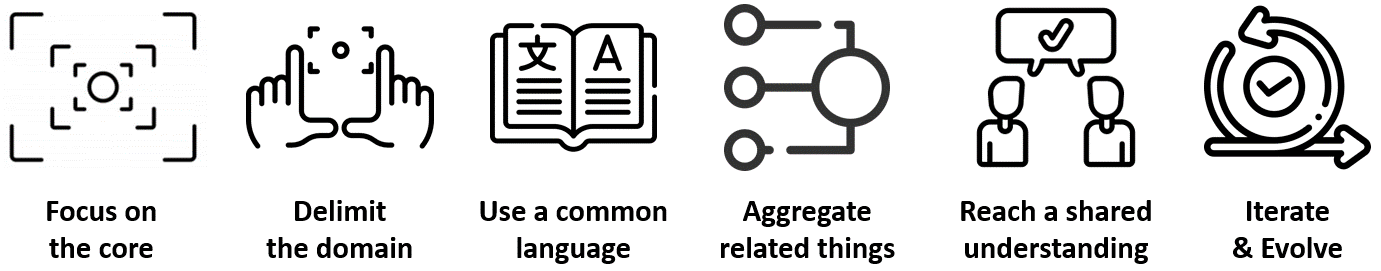

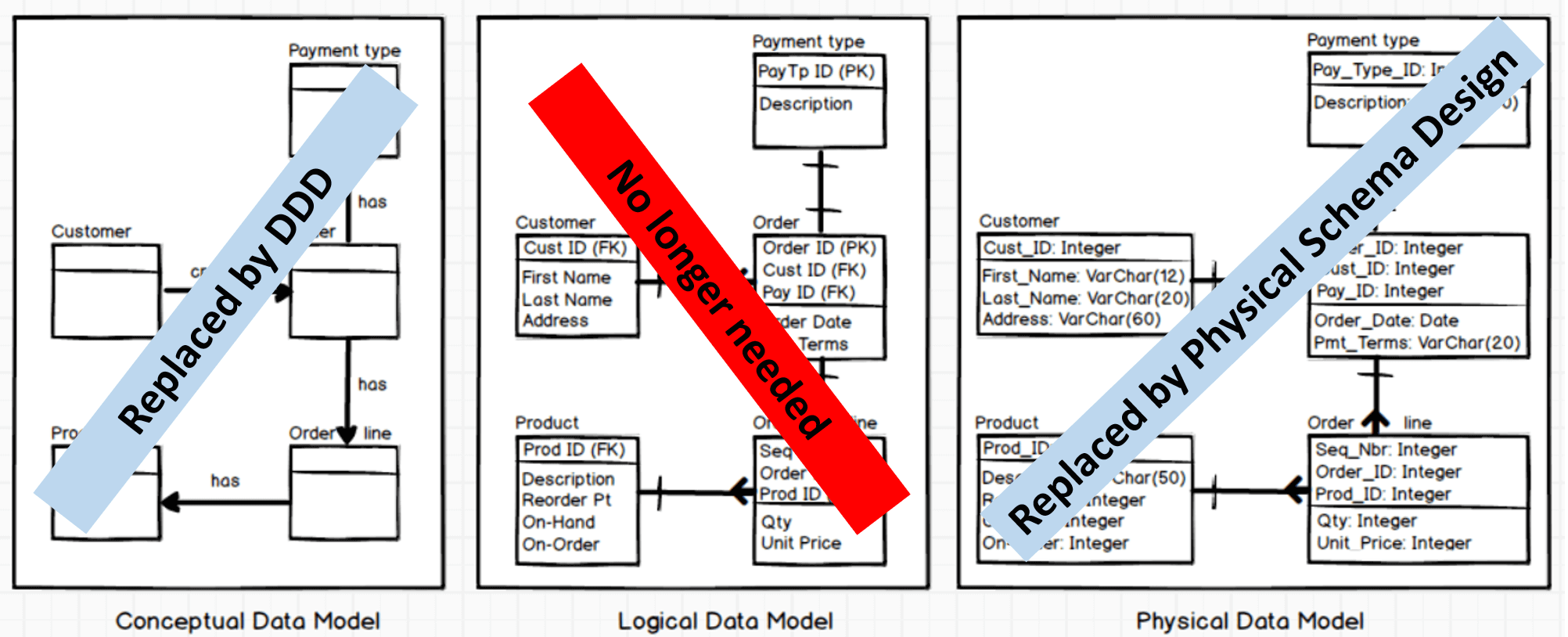

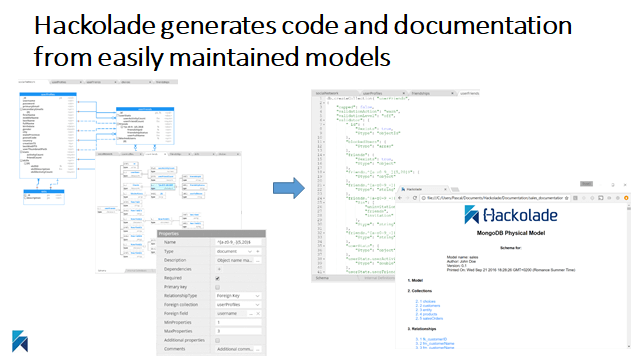

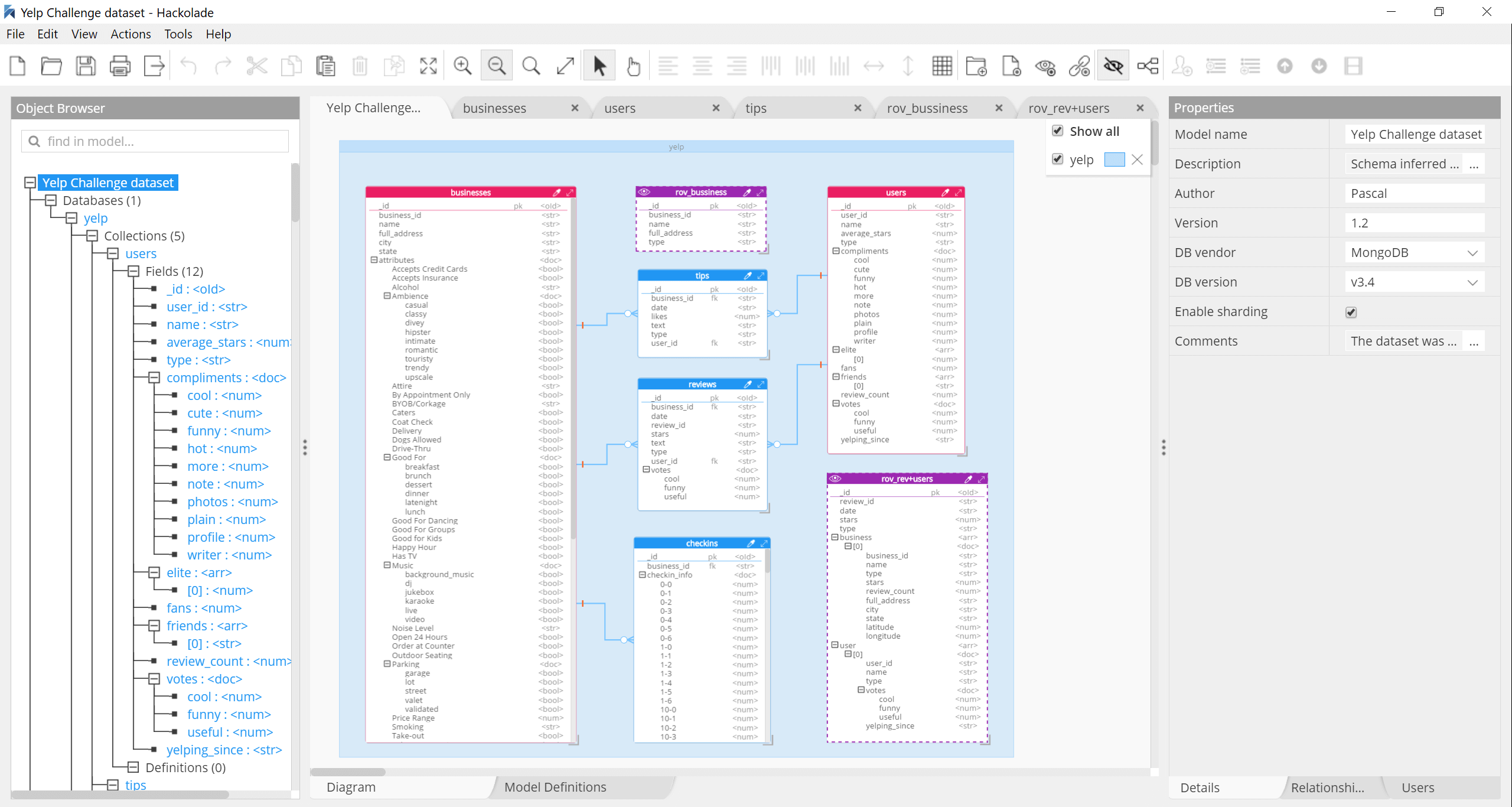

Traditional data modeling must evolve to remain relevant. There's a pragmatic way to perform data modeling, using our next-gen Hackolade Studio data modeling tool. We call this approach "Domain-Driven Data Modeling".

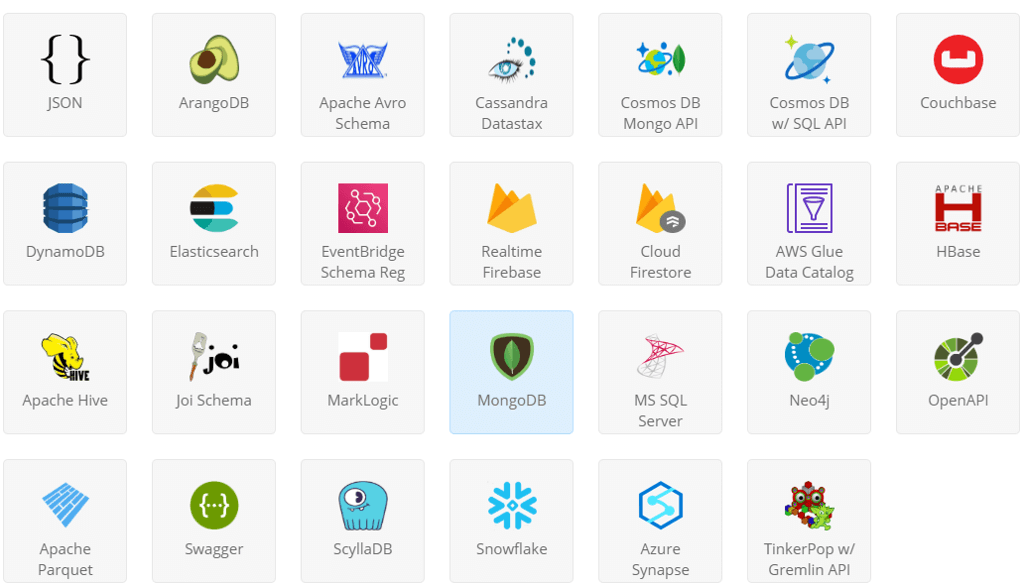

Polyglot Data Modeling creates a level of technology-agnostic data modeling that sits across the traditional boundaries between conceptual/logical and logical/physical data modeling.

Model, schema, metadata: they concern different teams.

Just as models represent a conversation between business and IT, you could argue that the amount of schema we keep in our NoSQL data stores reflects the interaction between IT and its systems.

Model, schema, metadata: these three terms articulate very different concepts in the data modeling profession, and as such, we should take deliberate care when using them.

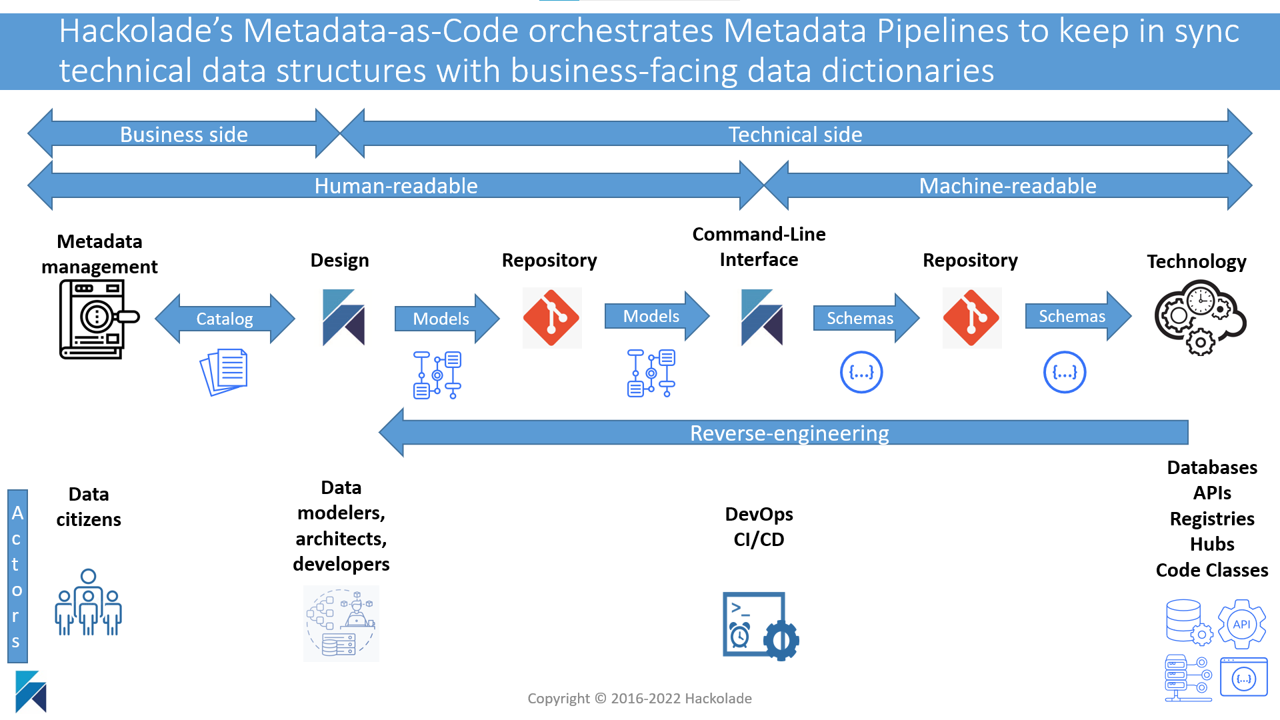

Metadata-as-Code as the antidote to Agile Angst

After having spent 10+ years in the wonderful world of Neo4j, I have been reflecting a bit about what it was that really attracted me personally, and many - MANY - customers as well, to the graph.

ChatGPT is a natural language processing (NLP) tool that uses machine learning to generate human-like responses to text-based inputs. We asked ChatGPT some basic questions about the field of data modeling. The unedited answers are impressive...

Data modeling is even more important today than it was with relational databases. But we need a more nimble and focused kind of data modeling to fit the challenges of agile development, DevOps, cloud migrations, and digital transformations.

Customers leverage the Command-Line Interface, invoking functions from a Jenkins CI/CD pipeline, from a command prompt, or with a Docker container. Combined with Git as the repository for data models and schemas, users get change tracking, peer reviews, versioning with branches and tags, easy rollbacks, as well as offline mode.

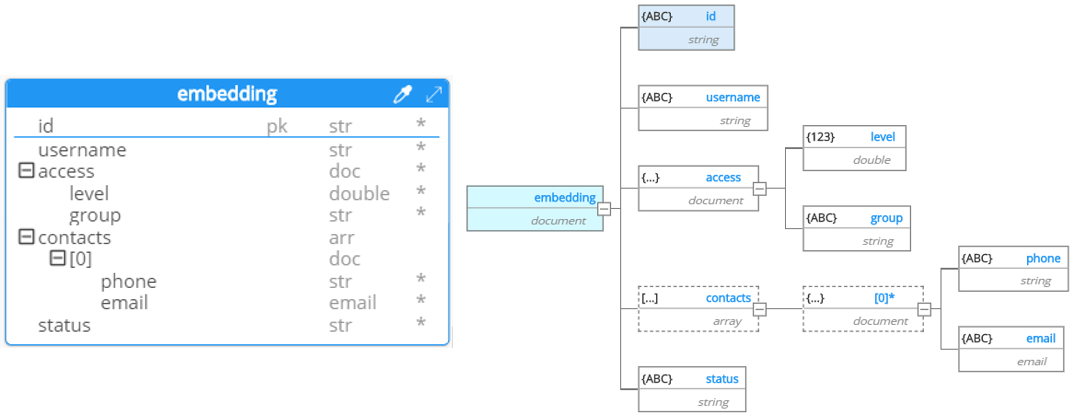

Polyglot Data Modeling is data modeling for polyglot persistence and communication. It is inspired by the role of logical data models, except that it allows denormalization, complex data types, and polymorphism.

Reality or not, the perception nowadays is that data modeling has become a bottleneck and doesn't fit in an agile development approach.

Reports of NoSQL's death are greatly exaggerated!...

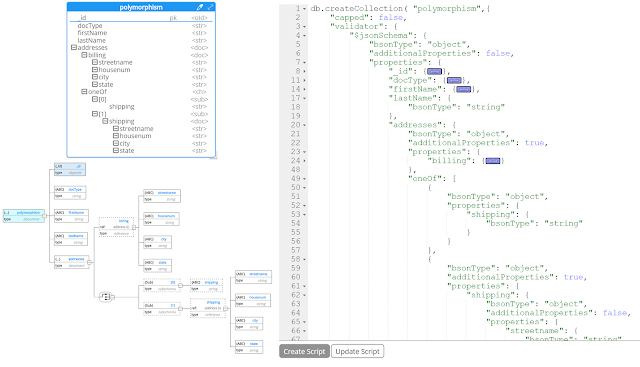

The MongoDB `$jsonSchema` validator becomes the equivalent of a DDL (data definition language) for NoSQL databases, letting you apply just the right level of control to your database.
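To make the DDL comparison concrete, here is a minimal sketch of what such a validator might look like, written as a Python dictionary. The `customers` collection, its field names, and the `build_create_command` helper are hypothetical and chosen only for illustration; the `validator`, `validationLevel`, and `validationAction` keys are the options MongoDB's `create` command accepts.

```python
import json

# Hypothetical schema for an illustrative "customers" collection.
customer_validator = {
    "$jsonSchema": {
        "bsonType": "object",
        "required": ["name", "email"],
        "properties": {
            "name": {"bsonType": "string"},
            "email": {"bsonType": "string", "pattern": "^.+@.+$"},
            "age": {"bsonType": "int", "minimum": 0},
        },
    }
}


def build_create_command(collection, validator, level="strict", action="error"):
    """Build the document for MongoDB's `create` command (helper name is an assumption).

    level:  "off", "moderate", or "strict"  -> which writes are validated
    action: "warn" or "error"               -> log the violation or reject the write
    """
    return {
        "create": collection,  # the command name must be the first key
        "validator": validator,
        "validationLevel": level,
        "validationAction": action,
    }


# A looser setting: validate new and already-valid documents, and only warn.
command = build_create_command(
    "customers", customer_validator, level="moderate", action="warn"
)
print(json.dumps(command, indent=2))
```

Assuming a connected PyMongo database handle, a command document like this could be passed to `db.command(command)`. Adjusting the level and action is one way to dial the degree of control up or down.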

New-generation developer productivity tools allow for taking full advantage of agile development and MongoDB: IDEs (Integrated Development Environments), GUIs (Graphical User Interfaces), and data modeling software.

Data is a corporate asset, and insights into the data are even more strategic. Sometimes overlooked as a best practice, data modeling is critical to understanding data, its interrelationships, and its rules.

Isn't it ironic that a technology that bears the label of “schema-less” is also known for the fact that schema design is one of its toughest challenges? ...